The next generation of devices using second-generation high-bandwidth memory (HBM2) will be able to leverage even faster memory chips thanks to an update by Jedec, which raises the per-pin data rate to 2.4Gbps. That data is delivered across a 1,024-bit bus split into eight independent channels, for a peak bandwidth of as much as 307GBps per stack.
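
The 307GBps headline is simple arithmetic: the per-pin data rate multiplied by the number of pins, divided by eight bits per byte. The Python sketch below shows that calculation. It assumes the 1,024-bit interface is divided evenly across the eight channels, which gives 128 bits per channel. The function name is hypothetical and is used here only for illustration.

```python
def peak_bandwidth_gbytes(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: Gbit/s per pin x pins / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8

BUS_WIDTH = 1024  # bits per HBM2 stack, as quoted above
CHANNELS = 8      # independent channels per stack
PIN_RATE = 2.4    # Gbit/s per pin under the updated spec

stack_bw = peak_bandwidth_gbytes(PIN_RATE, BUS_WIDTH)
# Assumption: each channel gets an equal 1,024 / 8 = 128-bit slice of the bus.
channel_bw = peak_bandwidth_gbytes(PIN_RATE, BUS_WIDTH // CHANNELS)
print(f"per stack: {stack_bw:.1f} GB/s, per channel: {channel_bw:.1f} GB/s")
# prints "per stack: 307.2 GB/s, per channel: 38.4 GB/s"
```

These are theoretical peaks. Real-world throughput depends on the memory controller and the access patterns.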

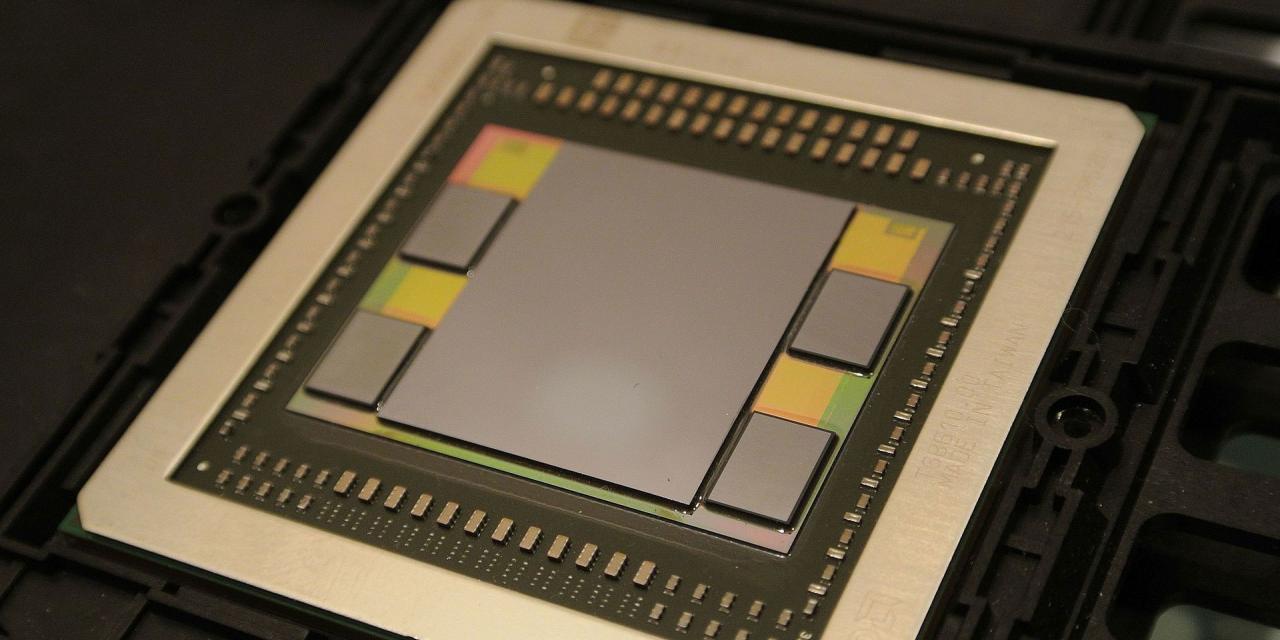

HBM has been used in graphics cards since the 2015 launch of AMD's Fury line of GPUs. It offered far more bandwidth than traditional GDDR5, but its high cost and limited availability at the time meant it hasn't made its way into many GPUs over the past few years. Most recently, Nvidia opted for GDDR6 memory, which is fast but nowhere near as fast. HBM is more commonly used in enterprise cards, but it is still found in AMD's Vega cards and in Intel's Kaby Lake-G processors, which pair Intel CPU cores with AMD Radeon Vega M graphics.

The new chips' peak of 307GBps per stack needs some context to show how big an improvement it is. It's nearly two and a half times the bandwidth of first-generation HBM, and a full 20 percent faster than the fastest HBM2 chips available today. It's not quite the half a terabyte per second we expect to see from HBM3 sometime in 2020, but it's a solid stopgap that should give future devices supporting the standard a worthwhile boost in memory performance.
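
The generational comparison can be checked by applying peak bandwidth = per-pin rate × 1,024 pins / 8 to each generation. This sketch assumes per-pin rates of 1.0Gbps for first-generation HBM, 2.0Gbps for the fastest HBM2 before this update, and 2.4Gbps under the new spec. The dictionary labels are illustrative only.

```python
def peak_bandwidth_gbytes(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Theoretical peak bandwidth of one stack in GB/s."""
    return pin_rate_gbps * bus_width_bits / 8

# Assumed per-pin data rates in Gbit/s for each generation.
rates = {"HBM": 1.0, "HBM2 (previous max)": 2.0, "HBM2 (updated)": 2.4}
bandwidths = {name: peak_bandwidth_gbytes(rate) for name, rate in rates.items()}

updated = bandwidths["HBM2 (updated)"]
for name, bw in bandwidths.items():
    # Ratio of the updated spec's peak to each generation's peak.
    print(f"{name}: {bw:.1f} GB/s, updated spec is {updated / bw:.2f}x")
```

Because every generation shares the same 1,024-bit bus, each ratio reduces to a ratio of per-pin rates: 2.4 / 1.0 and 2.4 / 2.0.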

Called JESD235B, the new HBM2 standard not only increases speed but also supports larger capacities. As Hexus reports, a single stack can hold as much as 24GB of memory. Smaller capacities are still supported, down to as little as 1GB per stack where that is preferable.

Image source: Wikipedia/PCGamesHardware